There’s been a lot of noise in the two weeks since Apple announced the iPhone X and its new Face ID solution. Much real and virtual ink has been spilled about the technology’s dire consequences for privacy and security, just as it was in the aftermath of the Touch ID announcement in 2013. People don’t seem to recall the controversy over Touch ID, or how the doomsayers of that time were largely proven wrong in their assessment of that solution.

This post attempts to step back and provide an omnibus examination of the concerns with Face ID.

What is Face ID?

Face ID is a biometric system that recognizes a user by comparing them against a biometric template of their face, captured earlier during device setup. Face ID relies on Apple’s TrueDepth camera, a sensor capable of capturing 3D images of a user’s face using a combination of an invisible infrared flood illuminator, an infrared dot projector, and an infrared camera.

The process uses structured-light scanning: by observing how a known pattern of projected infrared dots falls across the face, it extracts 3D data from 2D infrared images. Using infrared light allows the process to function under a variety of lighting conditions, and to see through many kinds of sunglasses.
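Apple has not published how TrueDepth turns dot images into depth. The textbook principle behind structured-light sensing, however, is triangulation. Treat the dot projector as a second "camera" offset from the infrared camera by a baseline B. A dot on a nearby surface then appears shifted by a disparity d, measured relative to where it would land for a very distant surface. Depth follows from Z = f·B/d, where f is the focal length in pixels.

The minimal sketch below assumes an idealized pinhole model. The focal length, baseline, and disparities are hypothetical numbers, not TrueDepth specifications.

```python
def depth_from_disparity(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth in metres from structured-light triangulation, Z = f * B / d.

    Assumes an idealized pinhole model with the projector acting as a second camera.
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a surface at finite depth")
    return focal_px * baseline_m / disparity_px


# Hypothetical parameters: 600 px focal length, 2 cm projector-to-camera baseline.
FOCAL_PX = 600.0
BASELINE_M = 0.02

for disparity in (30.0, 24.0, 20.0):
    depth = depth_from_disparity(FOCAL_PX, BASELINE_M, disparity)
    print(f"dot shifted {disparity:.0f} px -> surface about {depth:.2f} m away")
```

Nearer points on the face (the tip of the nose, say) shift their dots further than farther ones. The pattern of shifts across thousands of dots is what becomes a depth map.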

Is Face ID Secure?

Evaluating security properly requires a structured framework (such as Schneier’s attack trees), but a high-level approximation of the answer can be reached by answering three questions:

- Where is biometric data stored?

- Where are biometric operations performed?

- How effective is biometric matching?
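The attack-tree idea can be made concrete in a few lines. Each node is an attacker goal. OR nodes are satisfied by any one child, and AND nodes need all of their children. The cheapest attack is found by taking the minimum over OR children and the sum over AND children. The tree and costs below are hypothetical, chosen only to illustrate the mechanics; they are not measurements of Face ID.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Node:
    """One attacker goal. Leaves carry a cost; inner nodes combine their children."""
    goal: str
    kind: str = "LEAF"            # "LEAF", "OR" (any child suffices) or "AND" (all needed)
    cost: Optional[float] = None  # only meaningful for leaves
    children: List["Node"] = field(default_factory=list)


def cheapest(node: Node) -> float:
    """Minimum attacker cost to achieve node.goal (min over OR, sum over AND)."""
    if node.kind == "LEAF":
        return node.cost
    child_costs = [cheapest(child) for child in node.children]
    return min(child_costs) if node.kind == "OR" else sum(child_costs)


# Hypothetical tree and costs (arbitrary units), for illustration only.
tree = Node("Unlock the victim's phone", "OR", children=[
    Node("Spoof Face ID", "AND", children=[
        Node("Obtain physical access to the device", cost=10),
        Node("Build a convincing 3D mask", cost=100),
    ]),
    Node("Use the passcode", "AND", children=[
        Node("Obtain physical access to the device", cost=10),
        Node("Learn the passcode", "OR", children=[
            Node("Shoulder-surf the passcode", cost=30),
            Node("Compel the owner to disclose it", cost=50),
        ]),
    ]),
    Node("Exploit the Secure Enclave", cost=1_000_000),
])

print(cheapest(tree))
```

Because an OR node takes the minimum, hardening one branch (a better biometric) buys little if a cheaper sibling branch (a guessable passcode) remains open.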

Where Is Biometric Data Stored?

Apple has released its Face ID Security Guide, which confirms earlier statements by Craig Federighi that the security model of Face ID is very similar to that of Touch ID. As with Touch ID, biometric templates captured by the TrueDepth camera are processed and stored locally in the Secure Enclave, a dedicated security coprocessor. The biometric data is never exported from the Secure Enclave to the main operating system processor.

Where Are Biometric Operations Performed?

As with the biometric templates, all biometric matching and training for Face ID also occurs within the Secure Enclave. However, biometrics can do more than verify a user against a stored template; they can also be used to gate access to sensitive data. But how is that sensitive data protected?

In the case of Touch ID, iOS allowed the fingerprint sensor to gate the use of a cryptographic key created by an application. Applications could generate keys to protect data and require the user to touch the fingerprint sensor to approve the use of the key for a cryptographic operation. As with the biometric operations, all cryptographic operations for Touch ID are performed in the Secure Enclave (although it turns out that private keys could be exported until iOS 9 added a new flag to make them non-exportable).
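The property described here is a key that applications can use but never read, and it can be modeled in a few lines. This is a hypothetical toy, not Apple's API: `ToyEnclave` and its methods are invented for illustration. It computes an HMAC with a symmetric key as a stand-in for the enclave's private-key operations. Python cannot actually hide the key; the real Secure Enclave enforces this boundary in hardware.

```python
import hashlib
import hmac
import secrets
from typing import Callable


class ToyEnclave:
    """Hypothetical model of a biometric-gated, non-exportable key."""

    def __init__(self, biometric_check: Callable[[], bool]):
        # Generated "inside" the enclave and never returned to callers.
        # (The underscore is only a convention; real isolation is hardware-enforced.)
        self._key = secrets.token_bytes(32)
        self._biometric_check = biometric_check

    def export_key(self) -> bytes:
        # Mirrors a key created with a non-exportable flag.
        raise PermissionError("key is non-exportable")

    def authenticate(self, message: bytes) -> bytes:
        """Use the key on the caller's behalf, but only after a successful biometric match."""
        if not self._biometric_check():
            raise PermissionError("biometric authentication failed")
        return hmac.new(self._key, message, hashlib.sha256).digest()


# Usage: the app gets a MAC tag back, never the key itself.
enclave = ToyEnclave(biometric_check=lambda: True)   # simulate a successful match
tag = enclave.authenticate(b"approve payment #1")
print(len(tag))  # 32-byte HMAC-SHA256 tag
```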

Face ID follows the same pattern: it can gate Keychain-protected items using the existing touchIDAny or touchIDCurrentSet ACL flags. Applications that support Touch ID will automatically support Face ID without requiring any code changes by the developer.
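The practical difference between the two flags is what happens when the enrolled biometrics change. Per Apple's documentation, an item protected with touchIDCurrentSet is invalidated when fingers are added or removed, while touchIDAny keeps working with whatever is currently enrolled.

The hypothetical Python model below captures only that rule. On a device, the flags are passed to the Security framework (for example via `SecAccessControlCreateWithFlags`). The matching itself is probabilistic rather than the exact set lookup used here.

```python
from enum import Enum


class Policy(Enum):
    TOUCH_ID_ANY = "touchIDAny"                 # any currently enrolled biometric
    TOUCH_ID_CURRENT_SET = "touchIDCurrentSet"  # only the set enrolled when the item was saved


class Device:
    def __init__(self, enrolled):
        self.enrolled = frozenset(enrolled)     # identifiers of enrolled faces/fingers


class KeychainItem:
    """Hypothetical model of a biometric-protected Keychain item."""

    def __init__(self, device: Device, policy: Policy, secret: str):
        self.device, self.policy, self.secret = device, policy, secret
        self._enrolled_at_save = device.enrolled

    def read(self, presented: str) -> str:
        if (self.policy is Policy.TOUCH_ID_CURRENT_SET
                and self.device.enrolled != self._enrolled_at_save):
            raise PermissionError("item invalidated: enrolled biometrics changed")
        if presented not in self.device.enrolled:   # stand-in for a real biometric match
            raise PermissionError("biometric not recognized")
        return self.secret


phone = Device({"owner-face"})
any_item = KeychainItem(phone, Policy.TOUCH_ID_ANY, "token-A")
current_item = KeychainItem(phone, Policy.TOUCH_ID_CURRENT_SET, "token-B")

# The owner resets Face ID and enrolls again (or someone else enrolls their face).
phone.enrolled = frozenset({"new-face"})

print(any_item.read("new-face"))   # still readable
try:
    current_item.read("new-face")
except PermissionError as err:
    print(err)                      # invalidated by the enrollment change
```

This is why touchIDCurrentSet is the stricter choice for sensitive secrets. Someone who learns the passcode cannot enroll their own face and then unlock previously saved items.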

How Effective Is Biometric Matching?

An additional concern in biometric systems is how well the system defends itself against a malicious attacker presenting a fake biometric. These systems extract features from the sensor data to create a biometric template during setup (enrollment); to check someone’s identity, the system captures another set of data from the sensor, extracts features, and then measures how “close” these features are to the stored template’s features.

As a result, these systems are necessarily probabilistic; requiring an exact match would reject even the right finger or face. Subsequent captures, even from the same biometric, are never identical for a variety of reasons (limited sensor resolution, dirt and environmental noise, positioning of the biometric, etc.), so there is always “wiggle room” in the matching process. It is through this gap that attackers attempt to squeeze.
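Here is a minimal sketch of the matching step. It assumes features are plain numeric vectors compared by Euclidean distance against a fixed threshold. Apple's actual features and comparison are not public; the vectors and the threshold below are hypothetical.

```python
import math


def matches(template, probe, threshold):
    """Accept the probe if its features lie within `threshold` of the enrolled template."""
    return math.dist(template, probe) <= threshold


template = [0.12, 0.80, 0.45, 0.33]     # hypothetical features stored at enrollment
same_user = [0.14, 0.78, 0.47, 0.30]    # same face, slightly different capture
other_user = [0.60, 0.20, 0.90, 0.10]   # a different face

print(template == same_user)                         # False: exact matching would reject the owner
print(matches(template, same_user, threshold=0.1))   # True: within the wiggle room
print(matches(template, other_user, threshold=0.1))  # False
```

The threshold is the wiggle room. Raising it reduces false rejections of the real owner but increases false acceptances, which is exactly the gap a spoof has to land in.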

Could a hacker bypass Face ID? In all likelihood, yes, if history is any guide: less than a day after the public release of Touch ID-enabled devices, German hacker “starbug” demonstrated how to spoof the Touch ID sensor using a lifted fingerprint, wood glue, and a printed circuit board.

A year later, the same hacker demonstrated how to replicate fingerprints using photos taken with a standard digital camera. The 2D face recognition on the Samsung Galaxy Note 8 was similarly fooled by a 2D photo, an attack that Face ID’s use of 3D sensing and infrared defends against.

With Face ID, spoofing concerns have again been raised. However, based on the information Apple has released so far, Face ID is likely to be more secure than Touch ID. Touch ID has a false acceptance rate (FAR) of 1/50,000, assuming only one enrolled fingerprint; enrolling multiple fingers increases the false acceptance rate roughly in proportion (two fingers ≈ 1/25,000, three ≈ 1/16,667, and so on). In contrast, Apple has stated that Face ID has a FAR of 1/1,000,000. And, as pointed out in the wake of the Touch ID spoofing attacks, bypassing a device-based biometric matching process requires significant effort and, more importantly, physical access to the device. Without physical access, possessing a biometric capable of spoofing the sensor is useless.
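The multi-finger figures follow from a simple model. Assume each enrolled finger is an independent chance for an impostor to match. With per-finger FAR p and n fingers, the combined rate is 1 − (1 − p)^n, which is approximately n·p for small p. The independence assumption is ours, not Apple's. The sketch uses exact fractions to avoid rounding noise.

```python
from fractions import Fraction


def combined_far(per_template_far: Fraction, templates: int) -> Fraction:
    """Probability that an impostor matches at least one of `templates` independent templates."""
    return 1 - (1 - per_template_far) ** templates


TOUCH_ID_FAR = Fraction(1, 50_000)   # Apple's figure for a single enrolled finger
FACE_ID_FAR = Fraction(1, 1_000_000)

for fingers in range(1, 6):
    far = combined_far(TOUCH_ID_FAR, fingers)
    print(f"{fingers} finger(s): about 1 in {round(1 / far):,}")
print(f"Face ID: 1 in {round(1 / FACE_ID_FAR):,}")
```

Under this model, three fingers come to about 1 in 16,667. Five fingers (the most Touch ID allows) come to about 1 in 10,000, still two orders of magnitude weaker than Apple's stated figure for Face ID.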

What Are the Real Risks of Face ID?

Attacks on the Secure Enclave

The security of Face ID, and of iOS as a whole, depends heavily on the security of the device’s operating system kernel, which both enforces authorization rules around data and protects device secrets. The Secure Enclave plays a central role in protecting data and enforcing authorization rules on the device. Even specialized security environments may have vulnerabilities that allow an attacker to gain access to a device and its data (as we saw with the successful attacks on Qualcomm’s implementation of TrustZone, a cousin of the Secure Enclave).

The bar for such attacks is very high, and Apple continues to make significant investments to raise that bar. Should such an attack be possible, it’s likely that only the most adept attackers (such as nation states) would be capable of exploiting it.

Such an attack is not a problem unique to Face ID. What might be unique is that attackers could extract copies of the 2D infrared images of your face, along with the biometric features extracted from the depth maps created by the TrueDepth sensor. That information might allow an attacker to gather facial data for a large number of users. However, given that the average citizen is caught on camera 75 times a day, this hardly seems the easiest path for an attacker to gather such information.

Novel Face Biometric Attacks

Face recognition has some unique challenges compared with fingerprint recognition, such as distinguishing twins, something that Apple called out in both its presentation and its security guide.

That said, Apple claims the TrueDepth camera is able to distinguish twins based on the high resolution of its sensor and minute differences between twins. However, even if Apple can’t distinguish all twins (it notes that for identical twins the “probability of a false match is different for twins and siblings that look like you”, and recommends using a passcode to authenticate), consider that twins account for only about 3.35% of births, according to the CDC. That’s a fairly small percentage of the population. And, again, your evil twin needs physical access to the device (though for twins, that’s probably not a stretch of the imagination).

While I’m not aware of any public demonstration of a spoof attack against the TrueDepth camera (or its close cousin, the RealSense camera used in Windows 10 devices), I expect someone will eventually figure out how to do it. It could be something high-tech that confounds the AI (such as the specially designed glasses that researchers have shown can fool 2D face recognition systems), or something relatively low-tech (like a 3D-printed mask generated from a single picture of your face). Apple claims to have thought of everything (even the mask-based attack), but I have no doubt someone will find a way.

Targeted Device Attacks

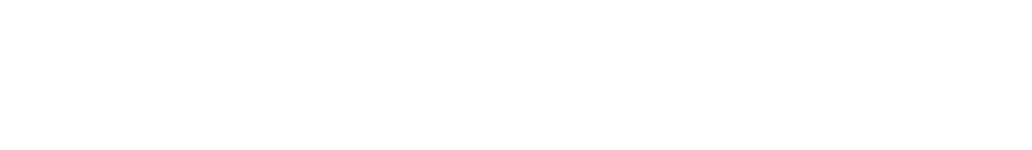

If you accept that the Secure Enclave does its job of defending against remote, scalable attacks, then what remains are attacks on a device in the attacker’s possession. Targeted attacks (those where an attacker has access to both the device and the biometric) are extremely difficult to defend against.

For example, the adoption of Touch ID revealed a number of pragmatic issues in deployment. Parents had to worry that their kids might press a sleeping parent’s finger to the Touch ID sensor, approving hundreds of dollars in purchases. Banks worried about “friendly fraud” by a disgruntled spouse whose fingerprint was registered on their partner’s device, enabling them to clear out their partner’s bank account. And civil liberties watchdogs worried that biometrics could erode Fifth Amendment protections against self-incrimination: unlike a PIN (which law enforcement cannot compel you to disclose), a fingerprint can be pressed to a sensor without your cooperation.

While Face ID eliminates the “friendly fraud” risk (as you can enroll only a single face), most of the other risks remain. Apple has attempted to limit the risk of targeted attacks in three ways. It adds stronger lockouts when the wrong biometric is presented multiple times (as happened in the Apple demo). It requires attention for authentication (detecting that your eyes are open and directed at the device). And it lets the user force the device to fall back to PIN authentication by pressing the power button five times.
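The interplay of these protections can be modeled as a small state machine. This is a hypothetical sketch, not Apple's implementation. The failure limit of five is an assumed parameter. Whether an inattentive glance counts as a failed attempt is a design choice made here for illustration.

```python
from enum import Enum, auto


class Unlock(Enum):
    FACE = auto()               # unlocked by Face ID
    RETRY = auto()              # not unlocked, biometrics still allowed
    PASSCODE_REQUIRED = auto()  # biometrics locked out until the passcode is entered


class FaceUnlockPolicy:
    """Hypothetical model of the lockout rules described above."""

    MAX_FAILURES = 5  # assumed limit on consecutive failed matches

    def __init__(self):
        self.failures = 0
        self.biometrics_disabled = False

    def emergency_disable(self):
        """User-triggered fallback (e.g. the button-press shortcut): passcode only."""
        self.biometrics_disabled = True

    def attempt(self, face_matches: bool, attentive: bool) -> Unlock:
        if self.biometrics_disabled or self.failures >= self.MAX_FAILURES:
            return Unlock.PASSCODE_REQUIRED
        if not attentive:
            # Eyes closed or looking away: don't unlock (not counted as a failure here).
            return Unlock.RETRY
        if face_matches:
            self.failures = 0
            return Unlock.FACE
        self.failures += 1
        if self.failures >= self.MAX_FAILURES:
            return Unlock.PASSCODE_REQUIRED
        return Unlock.RETRY

    def passcode_entered(self, correct: bool) -> bool:
        """A correct passcode clears the lockout and re-enables biometrics."""
        if correct:
            self.failures = 0
            self.biometrics_disabled = False
        return correct


policy = FaceUnlockPolicy()
print(policy.attempt(face_matches=True, attentive=False))  # Unlock.RETRY: sleeping owner
for _ in range(5):
    result = policy.attempt(face_matches=False, attentive=True)
print(result)                                              # Unlock.PASSCODE_REQUIRED
```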

These measures do not eliminate the risk entirely, however. Users facing the risk of a targeted attack should avoid biometrics and rely on a PIN.

Even that may not be enough: researchers have demonstrated successful attacks that guess users’ PINs via side channels, and many users continue to set poor PINs. And as we saw earlier this year, when border agents forced a US-born NASA scientist to give up his device’s PIN, avoiding biometrics won’t help you in every situation. Although a law enforcement officer who forces a user to unlock their device may render any evidence obtained inadmissible in court, that’s only a comfort where the rule of law applies. Around the world, some users’ threat models may be quite different.

Acceptance of Server-Side Biometrics

One final risk is less about the technology and more about public attitudes towards biometrics.

Many civil liberties advocates are concerned that the spread of biometrics in mobile devices may accustom citizens to the idea of governments using biometrics, ultimately leading to Orwellian outcomes. That’s a reasonable concern, but it’s not something Apple can address other than by advocating for client-based (versus server-based) biometric solutions. Biometric authentication has already been deployed in ATMs in Japan for over a decade, iris biometrics have been deployed in India for over one billion users, and customs agencies around the world already use fingerprints and photos to identify travelers at the border. It’s not clear that Face ID will significantly accelerate government biometric adoption beyond its current pace.

If anything, Apple has set a high bar for biometric systems that is being emulated by Android and by Android OEMs. Even the US National Institute of Standards and Technology (NIST) has indicated in Special Publication 800-63B that client-side comparison of biometrics is preferred.

Recommendation: Focus on the Positives

It may not be perfect, but Face ID continues the trend of biometrics delivering both better security and increased usability. That’s a good thing.

Increased Protection of Devices by Default

For all the potential pitfalls of Face ID, it’s important to realize how it continues the trend of improving security for the vast majority of users. Before Touch ID, only a small percentage of users protected their devices with PINs; anyone who picked up a device had full access to it and its data. After Touch ID devices had been available for a year, 89% of users with Touch ID-enabled devices protected their device against this attack. Face ID will likely push that number even higher.

Reduced Reliance on Passwords

Touch ID and Face ID open the possibility of moving away from passwords, the toxic waste of online security. For most people, the worry is not that a twin with access to their device will bypass Face ID. Rather, it is that a remote attacker who compromises Yahoo will recover millions of users’ passwords and use them to access other accounts (because users typically reuse the same password everywhere). By moving toward authentication standards like FIDO, which can leverage Touch ID or Face ID to protect online accounts against remote, scalable attacks, we dramatically strengthen the defenses attackers must overcome to compromise our accounts.
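Standards like FIDO resist breaches because the server stores only a public key and verifies a signature over a fresh challenge. A stolen database therefore contains nothing an attacker can replay elsewhere.

The sketch below shows that challenge-response shape using textbook RSA on deliberately tiny primes, so it runs on the standard library alone. Real FIDO authenticators typically use elliptic-curve signatures and add origin binding and attestation, all omitted here. The class names are hypothetical, and `user_approved` stands in for a Face ID or Touch ID check.

```python
import hashlib
import secrets


def challenge_digest(challenge: bytes, n: int) -> int:
    """Hash the challenge and reduce it into the RSA modulus."""
    return int.from_bytes(hashlib.sha256(challenge).digest(), "big") % n


class Authenticator:
    """Device side: holds the private key and signs only after user approval."""

    def __init__(self, p: int = 61, q: int = 53, e: int = 17):
        # Toy primes: trivially factorable, for illustration only. Never use in practice.
        self._n, self._e = p * q, e
        self._d = pow(e, -1, (p - 1) * (q - 1))   # private exponent (Python 3.8+)

    @property
    def public_key(self):
        return self._n, self._e

    def sign(self, challenge: bytes, user_approved: bool) -> int:
        if not user_approved:                     # stand-in for a biometric check
            raise PermissionError("user did not approve")
        return pow(challenge_digest(challenge, self._n), self._d, self._n)


class Server:
    """Relying party: stores only the public key, so a breach leaks no reusable secret."""

    def __init__(self, public_key):
        self.n, self.e = public_key

    def new_challenge(self) -> bytes:
        # Fresh per login, so an old signature can't simply be replayed (with real key sizes).
        return secrets.token_bytes(16)

    def verify(self, challenge: bytes, signature: int) -> bool:
        return pow(signature, self.e, self.n) == challenge_digest(challenge, self.n)


phone = Authenticator()
server = Server(phone.public_key)
challenge = server.new_challenge()
signature = phone.sign(challenge, user_approved=True)
print(server.verify(challenge, signature))  # True
```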